自迴歸¶

此筆記本介紹使用 AutoReg 模型進行自迴歸建模。它還涵蓋了 ar_select_order 在選擇使諸如 AIC 等訊息準則最小化的模型方面的輔助。自迴歸模型的動態由下式給出

AutoReg 也允許具有以下項的模型

確定性項 (

trend)n:無確定性項c:常數(預設)ct:常數和時間趨勢t:僅時間趨勢

季節虛擬變數 (

seasonal)True包含 \(s-1\) 個虛擬變數,其中 \(s\) 是時間序列的週期(例如,每月為 12)

自訂確定性項 (

deterministic)接受

DeterministicProcess

外生變數 (

exog)要包含在模型中的外生變數的

DataFrame或array

省略選定的落後期數 (

lags)如果

lags是整數的可迭代物件,則僅將這些包含在模型中。

完整規範為

其中

\(d_i\) 是一個季節虛擬變數,如果 \(mod(t, period) = i\),則為 1。如果模型包含常數(

trend中有c),則排除週期 0。\(t\) 是一個時間趨勢 (\(1,2,\ldots\)),在第一個觀察值中從 1 開始。

\(x_{t,j}\) 是外生迴歸量。注意,在定義模型時,這些在外生變數時間上與左側變數對齊。

\(\epsilon_t\) 假設為白雜訊過程。

第一個單元格匯入標準套件,並設定繪圖以內嵌顯示。

[1]:

%matplotlib inline

import matplotlib.pyplot as plt

import pandas as pd

import pandas_datareader as pdr

import seaborn as sns

from statsmodels.tsa.api import acf, graphics, pacf

from statsmodels.tsa.ar_model import AutoReg, ar_select_order

此單元格設定繪圖樣式,為 matplotlib 註冊 pandas 日期轉換器,並設定預設圖形大小。

[2]:

sns.set_style("darkgrid")

pd.plotting.register_matplotlib_converters()

# Default figure size

sns.mpl.rc("figure", figsize=(16, 6))

sns.mpl.rc("font", size=14)

第一組範例使用美國房屋開工率的月度成長率,該成長率未經季節性調整。季節性可以從規律的峰谷模式中明顯看出。我們將時間序列的頻率設定為「MS」(月份開始),以避免在使用 AutoReg 時出現警告。

[3]:

data = pdr.get_data_fred("HOUSTNSA", "1959-01-01", "2019-06-01")

housing = data.HOUSTNSA.pct_change().dropna()

# Scale by 100 to get percentages

housing = 100 * housing.asfreq("MS")

fig, ax = plt.subplots()

ax = housing.plot(ax=ax)

我們可以從 AR(3) 開始。雖然這不是此數據的好模型,但它演示了 API 的基本用法。

[4]:

mod = AutoReg(housing, 3, old_names=False)

res = mod.fit()

print(res.summary())

AutoReg Model Results

==============================================================================

Dep. Variable: HOUSTNSA No. Observations: 725

Model: AutoReg(3) Log Likelihood -2993.442

Method: Conditional MLE S.D. of innovations 15.289

Date: Thu, 03 Oct 2024 AIC 5996.884

Time: 15:55:21 BIC 6019.794

Sample: 05-01-1959 HQIC 6005.727

- 06-01-2019

===============================================================================

coef std err z P>|z| [0.025 0.975]

-------------------------------------------------------------------------------

const 1.1228 0.573 1.961 0.050 0.000 2.245

HOUSTNSA.L1 0.1910 0.036 5.235 0.000 0.120 0.263

HOUSTNSA.L2 0.0058 0.037 0.155 0.877 -0.067 0.079

HOUSTNSA.L3 -0.1939 0.036 -5.319 0.000 -0.265 -0.122

Roots

=============================================================================

Real Imaginary Modulus Frequency

-----------------------------------------------------------------------------

AR.1 0.9680 -1.3298j 1.6448 -0.1499

AR.2 0.9680 +1.3298j 1.6448 0.1499

AR.3 -1.9064 -0.0000j 1.9064 -0.5000

-----------------------------------------------------------------------------

AutoReg 支援與 OLS 相同的共變異數估計器。以下,我們使用 cov_type="HC0",它是 White 的共變異數估計器。雖然參數估計值相同,但所有取決於標準誤差的量都會發生變化。

[5]:

res = mod.fit(cov_type="HC0")

print(res.summary())

AutoReg Model Results

==============================================================================

Dep. Variable: HOUSTNSA No. Observations: 725

Model: AutoReg(3) Log Likelihood -2993.442

Method: Conditional MLE S.D. of innovations 15.289

Date: Thu, 03 Oct 2024 AIC 5996.884

Time: 15:55:21 BIC 6019.794

Sample: 05-01-1959 HQIC 6005.727

- 06-01-2019

===============================================================================

coef std err z P>|z| [0.025 0.975]

-------------------------------------------------------------------------------

const 1.1228 0.601 1.869 0.062 -0.055 2.300

HOUSTNSA.L1 0.1910 0.035 5.499 0.000 0.123 0.259

HOUSTNSA.L2 0.0058 0.039 0.150 0.881 -0.070 0.081

HOUSTNSA.L3 -0.1939 0.036 -5.448 0.000 -0.264 -0.124

Roots

=============================================================================

Real Imaginary Modulus Frequency

-----------------------------------------------------------------------------

AR.1 0.9680 -1.3298j 1.6448 -0.1499

AR.2 0.9680 +1.3298j 1.6448 0.1499

AR.3 -1.9064 -0.0000j 1.9064 -0.5000

-----------------------------------------------------------------------------

[6]:

sel = ar_select_order(housing, 13, old_names=False)

sel.ar_lags

res = sel.model.fit()

print(res.summary())

AutoReg Model Results

==============================================================================

Dep. Variable: HOUSTNSA No. Observations: 725

Model: AutoReg(13) Log Likelihood -2676.157

Method: Conditional MLE S.D. of innovations 10.378

Date: Thu, 03 Oct 2024 AIC 5382.314

Time: 15:55:21 BIC 5450.835

Sample: 03-01-1960 HQIC 5408.781

- 06-01-2019

================================================================================

coef std err z P>|z| [0.025 0.975]

--------------------------------------------------------------------------------

const 1.3615 0.458 2.970 0.003 0.463 2.260

HOUSTNSA.L1 -0.2900 0.036 -8.161 0.000 -0.360 -0.220

HOUSTNSA.L2 -0.0828 0.031 -2.652 0.008 -0.144 -0.022

HOUSTNSA.L3 -0.0654 0.031 -2.106 0.035 -0.126 -0.005

HOUSTNSA.L4 -0.1596 0.031 -5.166 0.000 -0.220 -0.099

HOUSTNSA.L5 -0.0434 0.031 -1.387 0.165 -0.105 0.018

HOUSTNSA.L6 -0.0884 0.031 -2.867 0.004 -0.149 -0.028

HOUSTNSA.L7 -0.0556 0.031 -1.797 0.072 -0.116 0.005

HOUSTNSA.L8 -0.1482 0.031 -4.803 0.000 -0.209 -0.088

HOUSTNSA.L9 -0.0926 0.031 -2.960 0.003 -0.154 -0.031

HOUSTNSA.L10 -0.1133 0.031 -3.665 0.000 -0.174 -0.053

HOUSTNSA.L11 0.1151 0.031 3.699 0.000 0.054 0.176

HOUSTNSA.L12 0.5352 0.031 17.133 0.000 0.474 0.596

HOUSTNSA.L13 0.3178 0.036 8.937 0.000 0.248 0.388

Roots

==============================================================================

Real Imaginary Modulus Frequency

------------------------------------------------------------------------------

AR.1 1.0913 -0.0000j 1.0913 -0.0000

AR.2 0.8743 -0.5018j 1.0080 -0.0829

AR.3 0.8743 +0.5018j 1.0080 0.0829

AR.4 0.5041 -0.8765j 1.0111 -0.1669

AR.5 0.5041 +0.8765j 1.0111 0.1669

AR.6 0.0056 -1.0530j 1.0530 -0.2491

AR.7 0.0056 +1.0530j 1.0530 0.2491

AR.8 -0.5263 -0.9335j 1.0716 -0.3317

AR.9 -0.5263 +0.9335j 1.0716 0.3317

AR.10 -0.9525 -0.5880j 1.1194 -0.4120

AR.11 -0.9525 +0.5880j 1.1194 0.4120

AR.12 -1.2928 -0.2608j 1.3189 -0.4683

AR.13 -1.2928 +0.2608j 1.3189 0.4683

------------------------------------------------------------------------------

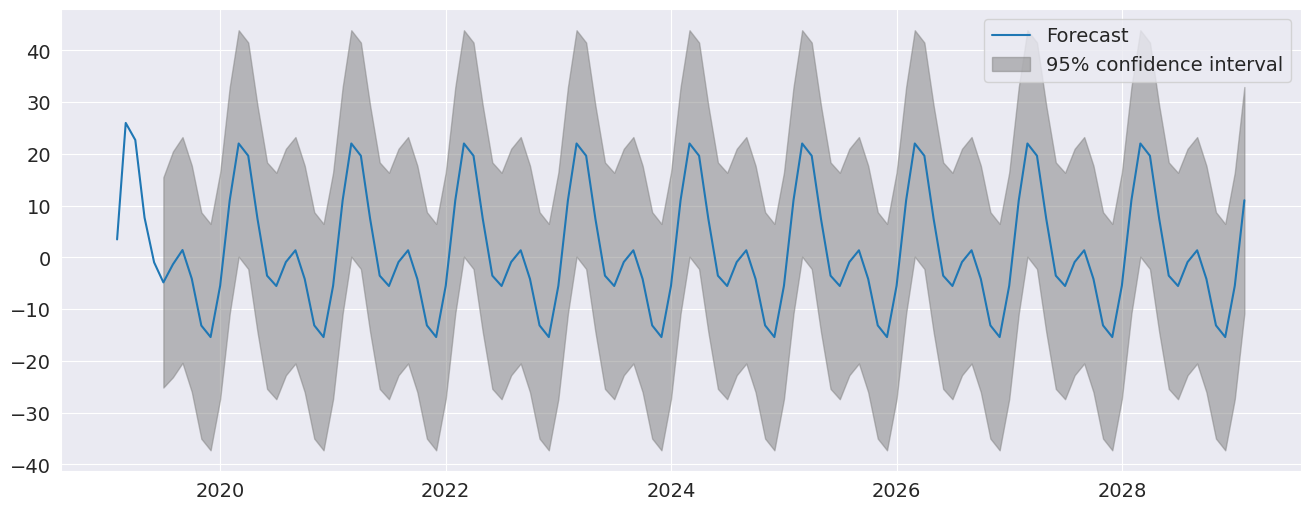

plot_predict 可視覺化預測。這裡我們產生大量的預測,顯示模型捕獲的字串季節性。

[7]:

fig = res.plot_predict(720, 840)

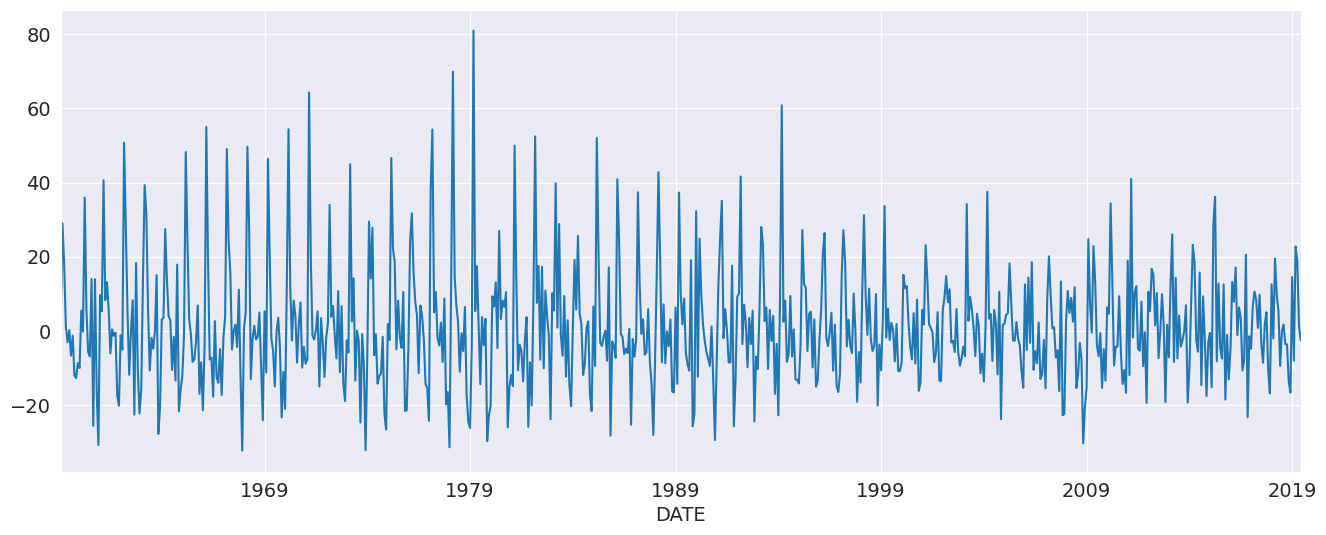

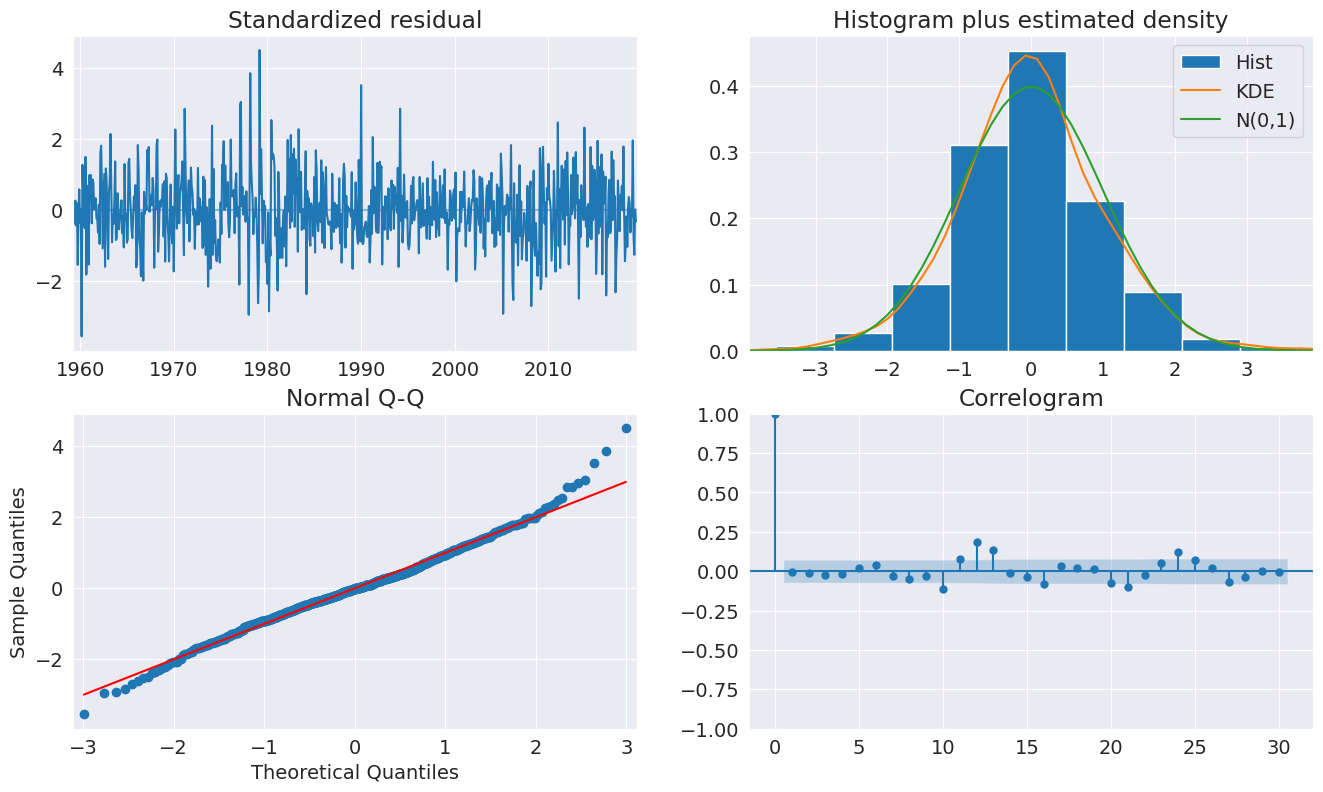

plot_diagnositcs 表明該模型捕獲了數據中的關鍵特徵。

[8]:

fig = plt.figure(figsize=(16, 9))

fig = res.plot_diagnostics(fig=fig, lags=30)

季節虛擬變數¶

AutoReg 支援季節虛擬變數,這是對季節性建模的替代方法。包含虛擬變數可將動態縮短為僅 AR(2)。

[9]:

sel = ar_select_order(housing, 13, seasonal=True, old_names=False)

sel.ar_lags

res = sel.model.fit()

print(res.summary())

AutoReg Model Results

==============================================================================

Dep. Variable: HOUSTNSA No. Observations: 725

Model: Seas. AutoReg(2) Log Likelihood -2652.556

Method: Conditional MLE S.D. of innovations 9.487

Date: Thu, 03 Oct 2024 AIC 5335.112

Time: 15:55:27 BIC 5403.863

Sample: 04-01-1959 HQIC 5361.648

- 06-01-2019

===============================================================================

coef std err z P>|z| [0.025 0.975]

-------------------------------------------------------------------------------

const 1.2726 1.373 0.927 0.354 -1.418 3.963

s(2,12) 32.6477 1.824 17.901 0.000 29.073 36.222

s(3,12) 23.0685 2.435 9.472 0.000 18.295 27.842

s(4,12) 10.7267 2.693 3.983 0.000 5.449 16.005

s(5,12) 1.6792 2.100 0.799 0.424 -2.437 5.796

s(6,12) -4.4229 1.896 -2.333 0.020 -8.138 -0.707

s(7,12) -4.2113 1.824 -2.309 0.021 -7.786 -0.636

s(8,12) -6.4124 1.791 -3.581 0.000 -9.922 -2.902

s(9,12) 0.1095 1.800 0.061 0.952 -3.419 3.638

s(10,12) -16.7511 1.814 -9.234 0.000 -20.307 -13.196

s(11,12) -20.7023 1.862 -11.117 0.000 -24.352 -17.053

s(12,12) -11.9554 1.778 -6.724 0.000 -15.440 -8.470

HOUSTNSA.L1 -0.2953 0.037 -7.994 0.000 -0.368 -0.223

HOUSTNSA.L2 -0.1148 0.037 -3.107 0.002 -0.187 -0.042

Roots

=============================================================================

Real Imaginary Modulus Frequency

-----------------------------------------------------------------------------

AR.1 -1.2862 -2.6564j 2.9514 -0.3218

AR.2 -1.2862 +2.6564j 2.9514 0.3218

-----------------------------------------------------------------------------

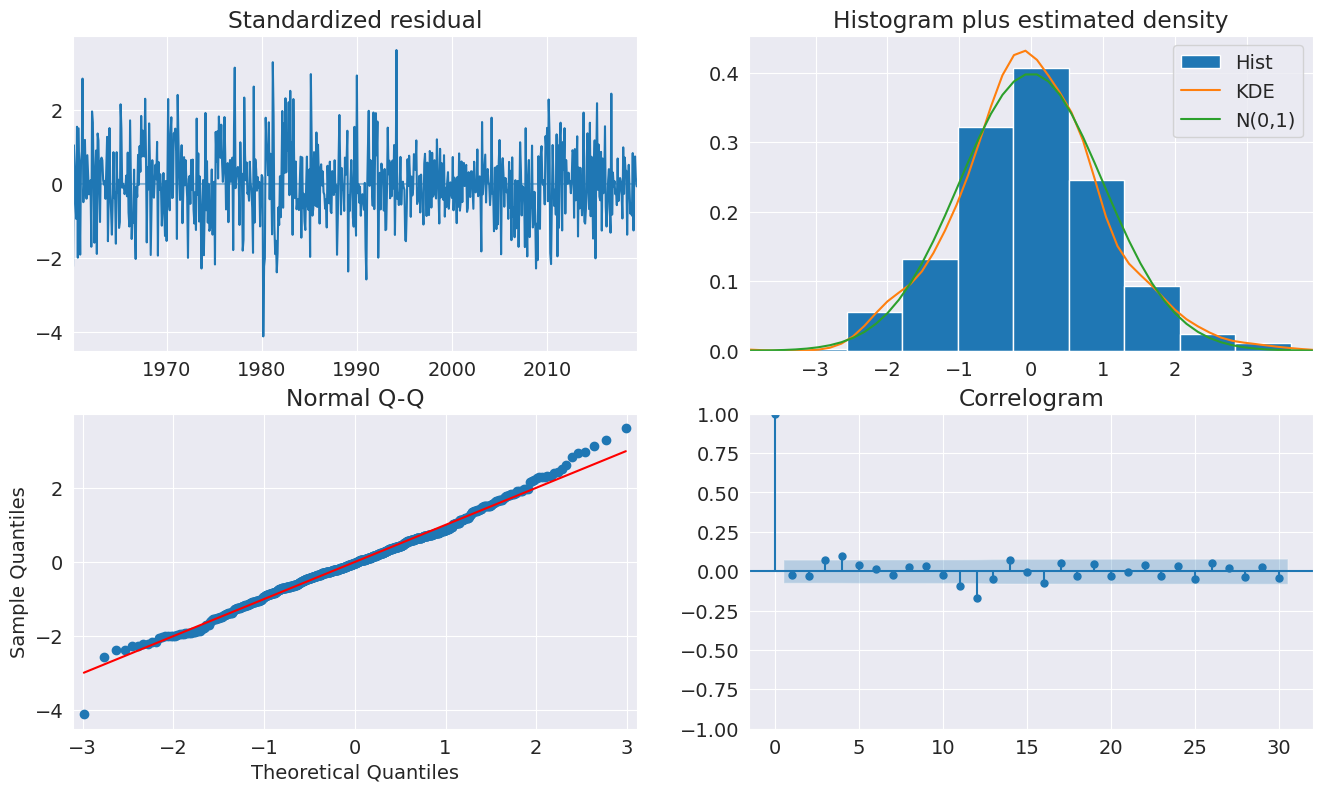

季節虛擬變數在預測中很明顯,預測在未來 10 年的所有期間都具有顯著的季節性成分。

[10]:

fig = res.plot_predict(720, 840)

[11]:

fig = plt.figure(figsize=(16, 9))

fig = res.plot_diagnostics(lags=30, fig=fig)

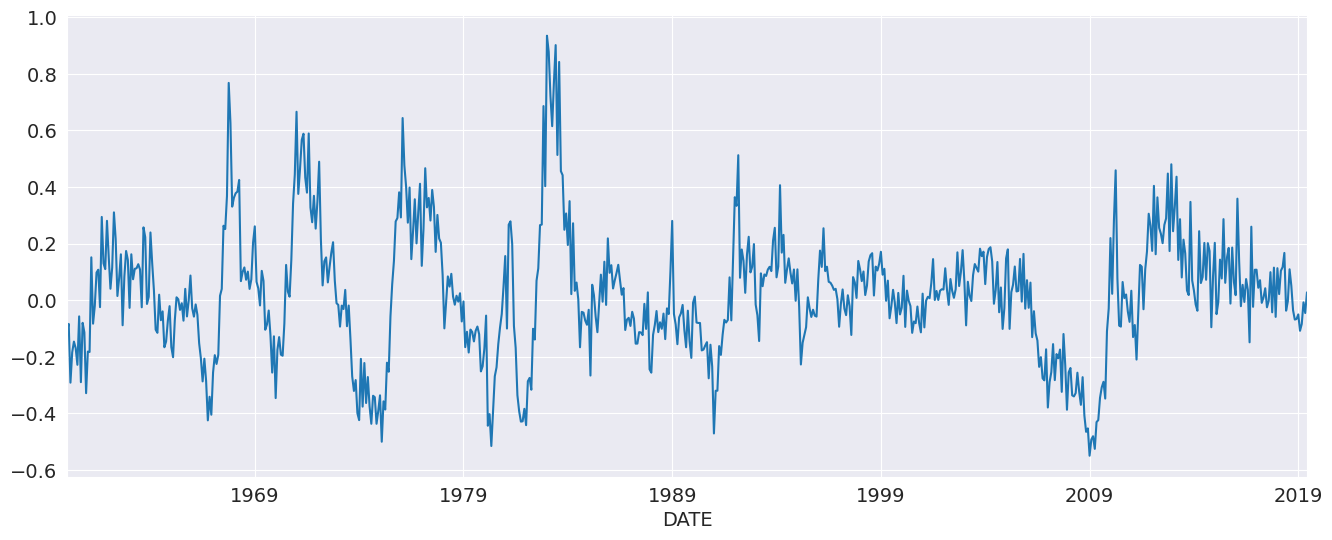

季節動態¶

雖然 AutoReg 不直接支援季節成分,因為它使用 OLS 來估計參數,但可以使用過參數化的季節 AR 來捕獲季節動態,該季節 AR 不會強加季節 AR 中的限制。

[12]:

yoy_housing = data.HOUSTNSA.pct_change(12).resample("MS").last().dropna()

_, ax = plt.subplots()

ax = yoy_housing.plot(ax=ax)

我們首先使用僅選擇最大落後期的簡單方法來選擇模型。所有較低的落後期都會自動包含。要檢查的最大落後期設定為 13,因為這允許模型嵌套一個具有短期 AR(1) 成分和季節性 AR(1) 成分的季節性 AR,因此

變成

展開時。AutoReg 不強制執行結構,但可以估計嵌套模型

我們看到選擇了所有 13 個落後期。

[13]:

sel = ar_select_order(yoy_housing, 13, old_names=False)

sel.ar_lags

[13]:

[1, 2, 3, 4, 5, 6, 7, 8, 9, 10, 11, 12, 13]

似乎不太可能需要所有 13 個落後期。我們可以設定 glob=True 來搜尋所有包含最多 13 個落後期的 \(2^{13}\) 個模型。

在這裡,我們看到選擇了前三個,第 7 個也被選擇,最後選擇了第 12 個和第 13 個。這在表面上與上述結構相似。

在擬合模型後,我們看一下診斷圖,該圖表明此規範似乎足以捕獲數據中的動態。

[14]:

sel = ar_select_order(yoy_housing, 13, glob=True, old_names=False)

sel.ar_lags

res = sel.model.fit()

print(res.summary())

AutoReg Model Results

==============================================================================

Dep. Variable: HOUSTNSA No. Observations: 714

Model: Restr. AutoReg(13) Log Likelihood 589.177

Method: Conditional MLE S.D. of innovations 0.104

Date: Thu, 03 Oct 2024 AIC -1162.353

Time: 15:55:45 BIC -1125.933

Sample: 02-01-1961 HQIC -1148.276

- 06-01-2019

================================================================================

coef std err z P>|z| [0.025 0.975]

--------------------------------------------------------------------------------

const 0.0035 0.004 0.875 0.382 -0.004 0.011

HOUSTNSA.L1 0.5640 0.035 16.167 0.000 0.496 0.632

HOUSTNSA.L2 0.2347 0.038 6.238 0.000 0.161 0.308

HOUSTNSA.L3 0.2051 0.037 5.560 0.000 0.133 0.277

HOUSTNSA.L7 -0.0903 0.030 -2.976 0.003 -0.150 -0.031

HOUSTNSA.L12 -0.3791 0.034 -11.075 0.000 -0.446 -0.312

HOUSTNSA.L13 0.3354 0.033 10.254 0.000 0.271 0.400

Roots

==============================================================================

Real Imaginary Modulus Frequency

------------------------------------------------------------------------------

AR.1 -1.0309 -0.2682j 1.0652 -0.4595

AR.2 -1.0309 +0.2682j 1.0652 0.4595

AR.3 -0.7454 -0.7417j 1.0515 -0.3754

AR.4 -0.7454 +0.7417j 1.0515 0.3754

AR.5 -0.3172 -1.0221j 1.0702 -0.2979

AR.6 -0.3172 +1.0221j 1.0702 0.2979

AR.7 0.2419 -1.0573j 1.0846 -0.2142

AR.8 0.2419 +1.0573j 1.0846 0.2142

AR.9 0.7840 -0.8303j 1.1420 -0.1296

AR.10 0.7840 +0.8303j 1.1420 0.1296

AR.11 1.0730 -0.2386j 1.0992 -0.0348

AR.12 1.0730 +0.2386j 1.0992 0.0348

AR.13 1.1193 -0.0000j 1.1193 -0.0000

------------------------------------------------------------------------------

[15]:

fig = plt.figure(figsize=(16, 9))

fig = res.plot_diagnostics(fig=fig, lags=30)

我們還可以包含季節虛擬變數。由於模型使用的是年同比變化,因此這些都是不顯著的。

[16]:

sel = ar_select_order(yoy_housing, 13, glob=True, seasonal=True, old_names=False)

sel.ar_lags

res = sel.model.fit()

print(res.summary())

AutoReg Model Results

====================================================================================

Dep. Variable: HOUSTNSA No. Observations: 714

Model: Restr. Seas. AutoReg(13) Log Likelihood 590.875

Method: Conditional MLE S.D. of innovations 0.104

Date: Thu, 03 Oct 2024 AIC -1143.751

Time: 16:08:11 BIC -1057.253

Sample: 02-01-1961 HQIC -1110.317

- 06-01-2019

================================================================================

coef std err z P>|z| [0.025 0.975]

--------------------------------------------------------------------------------

const 0.0167 0.014 1.215 0.224 -0.010 0.044

s(2,12) -0.0179 0.019 -0.931 0.352 -0.056 0.020

s(3,12) -0.0121 0.019 -0.630 0.528 -0.050 0.026

s(4,12) -0.0210 0.019 -1.089 0.276 -0.059 0.017

s(5,12) -0.0223 0.019 -1.157 0.247 -0.060 0.015

s(6,12) -0.0224 0.019 -1.160 0.246 -0.060 0.015

s(7,12) -0.0212 0.019 -1.096 0.273 -0.059 0.017

s(8,12) -0.0101 0.019 -0.520 0.603 -0.048 0.028

s(9,12) -0.0095 0.019 -0.491 0.623 -0.047 0.028

s(10,12) -0.0049 0.019 -0.252 0.801 -0.043 0.033

s(11,12) -0.0084 0.019 -0.435 0.664 -0.046 0.030

s(12,12) -0.0077 0.019 -0.400 0.689 -0.046 0.030

HOUSTNSA.L1 0.5630 0.035 16.160 0.000 0.495 0.631

HOUSTNSA.L2 0.2347 0.038 6.248 0.000 0.161 0.308

HOUSTNSA.L3 0.2075 0.037 5.634 0.000 0.135 0.280

HOUSTNSA.L7 -0.0916 0.030 -3.013 0.003 -0.151 -0.032

HOUSTNSA.L12 -0.3810 0.034 -11.149 0.000 -0.448 -0.314

HOUSTNSA.L13 0.3373 0.033 10.327 0.000 0.273 0.401

Roots

==============================================================================

Real Imaginary Modulus Frequency

------------------------------------------------------------------------------

AR.1 -1.0305 -0.2681j 1.0648 -0.4595

AR.2 -1.0305 +0.2681j 1.0648 0.4595

AR.3 -0.7447 -0.7414j 1.0509 -0.3754

AR.4 -0.7447 +0.7414j 1.0509 0.3754

AR.5 -0.3172 -1.0215j 1.0696 -0.2979

AR.6 -0.3172 +1.0215j 1.0696 0.2979

AR.7 0.2416 -1.0568j 1.0841 -0.2142

AR.8 0.2416 +1.0568j 1.0841 0.2142

AR.9 0.7837 -0.8304j 1.1418 -0.1296

AR.10 0.7837 +0.8304j 1.1418 0.1296

AR.11 1.0724 -0.2383j 1.0986 -0.0348

AR.12 1.0724 +0.2383j 1.0986 0.0348

AR.13 1.1192 -0.0000j 1.1192 -0.0000

------------------------------------------------------------------------------

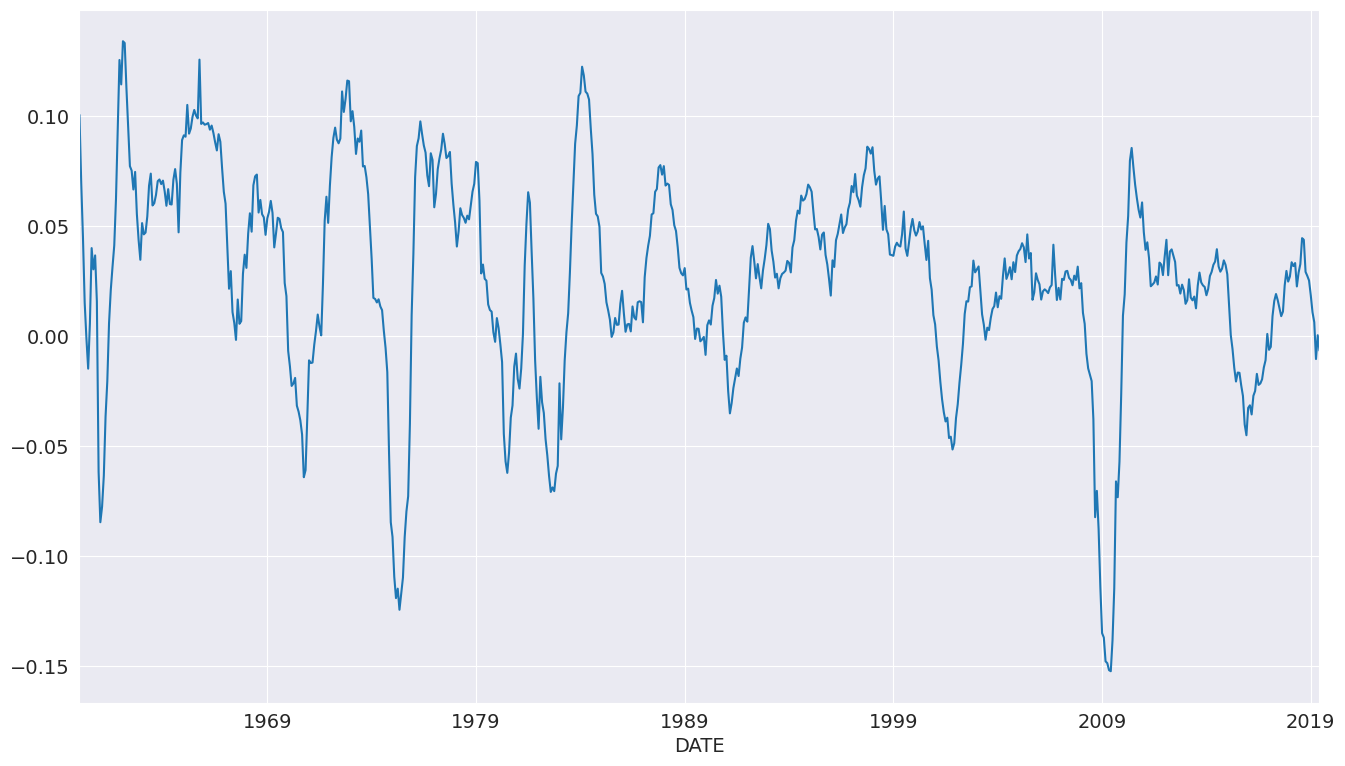

工業生產¶

我們將使用工業生產指數數據來檢查預測。

[17]:

data = pdr.get_data_fred("INDPRO", "1959-01-01", "2019-06-01")

ind_prod = data.INDPRO.pct_change(12).dropna().asfreq("MS")

_, ax = plt.subplots(figsize=(16, 9))

ind_prod.plot(ax=ax)

[17]:

<Axes: xlabel='DATE'>

我們將首先使用最多 12 個落後期來選擇模型。儘管許多係數不顯著,但 AR(13) 使 BIC 標準最小化。

[18]:

sel = ar_select_order(ind_prod, 13, "bic", old_names=False)

res = sel.model.fit()

print(res.summary())

AutoReg Model Results

==============================================================================

Dep. Variable: INDPRO No. Observations: 714

Model: AutoReg(13) Log Likelihood 2321.382

Method: Conditional MLE S.D. of innovations 0.009

Date: Thu, 03 Oct 2024 AIC -4612.763

Time: 16:08:12 BIC -4544.476

Sample: 02-01-1961 HQIC -4586.368

- 06-01-2019

==============================================================================

coef std err z P>|z| [0.025 0.975]

------------------------------------------------------------------------------

const 0.0012 0.000 2.783 0.005 0.000 0.002

INDPRO.L1 1.1577 0.035 33.179 0.000 1.089 1.226

INDPRO.L2 -0.0822 0.053 -1.543 0.123 -0.187 0.022

INDPRO.L3 7.041e-05 0.053 0.001 0.999 -0.104 0.104

INDPRO.L4 0.0076 0.053 0.143 0.886 -0.096 0.111

INDPRO.L5 -0.1329 0.053 -2.529 0.011 -0.236 -0.030

INDPRO.L6 -0.0080 0.052 -0.153 0.879 -0.111 0.095

INDPRO.L7 0.0556 0.052 1.064 0.287 -0.047 0.158

INDPRO.L8 -0.0296 0.052 -0.568 0.570 -0.132 0.073

INDPRO.L9 0.0920 0.052 1.770 0.077 -0.010 0.194

INDPRO.L10 -0.0808 0.052 -1.552 0.121 -0.183 0.021

INDPRO.L11 -0.0003 0.052 -0.005 0.996 -0.102 0.102

INDPRO.L12 -0.3820 0.052 -7.364 0.000 -0.484 -0.280

INDPRO.L13 0.3613 0.033 10.996 0.000 0.297 0.426

Roots

==============================================================================

Real Imaginary Modulus Frequency

------------------------------------------------------------------------------

AR.1 -1.0408 -0.2913j 1.0808 -0.4566

AR.2 -1.0408 +0.2913j 1.0808 0.4566

AR.3 -0.7803 -0.8039j 1.1204 -0.3726

AR.4 -0.7803 +0.8039j 1.1204 0.3726

AR.5 -0.2728 -1.0533j 1.0880 -0.2903

AR.6 -0.2728 +1.0533j 1.0880 0.2903

AR.7 0.2716 -1.0507j 1.0852 -0.2097

AR.8 0.2716 +1.0507j 1.0852 0.2097

AR.9 0.8010 -0.7285j 1.0827 -0.1175

AR.10 0.8010 +0.7285j 1.0827 0.1175

AR.11 1.0219 -0.2219j 1.0457 -0.0340

AR.12 1.0219 +0.2219j 1.0457 0.0340

AR.13 1.0560 -0.0000j 1.0560 -0.0000

------------------------------------------------------------------------------

我們也可以使用全域搜尋,如果需要,它允許輸入較長的落後期,而不需要較短的落後期。在這裡,我們看到許多落後期被刪除。該模型表明數據中可能存在一些季節性。

[19]:

sel = ar_select_order(ind_prod, 13, "bic", glob=True, old_names=False)

sel.ar_lags

res_glob = sel.model.fit()

print(res.summary())

AutoReg Model Results

==============================================================================

Dep. Variable: INDPRO No. Observations: 714

Model: AutoReg(13) Log Likelihood 2321.382

Method: Conditional MLE S.D. of innovations 0.009

Date: Thu, 03 Oct 2024 AIC -4612.763

Time: 16:08:14 BIC -4544.476

Sample: 02-01-1961 HQIC -4586.368

- 06-01-2019

==============================================================================

coef std err z P>|z| [0.025 0.975]

------------------------------------------------------------------------------

const 0.0012 0.000 2.783 0.005 0.000 0.002

INDPRO.L1 1.1577 0.035 33.179 0.000 1.089 1.226

INDPRO.L2 -0.0822 0.053 -1.543 0.123 -0.187 0.022

INDPRO.L3 7.041e-05 0.053 0.001 0.999 -0.104 0.104

INDPRO.L4 0.0076 0.053 0.143 0.886 -0.096 0.111

INDPRO.L5 -0.1329 0.053 -2.529 0.011 -0.236 -0.030

INDPRO.L6 -0.0080 0.052 -0.153 0.879 -0.111 0.095

INDPRO.L7 0.0556 0.052 1.064 0.287 -0.047 0.158

INDPRO.L8 -0.0296 0.052 -0.568 0.570 -0.132 0.073

INDPRO.L9 0.0920 0.052 1.770 0.077 -0.010 0.194

INDPRO.L10 -0.0808 0.052 -1.552 0.121 -0.183 0.021

INDPRO.L11 -0.0003 0.052 -0.005 0.996 -0.102 0.102

INDPRO.L12 -0.3820 0.052 -7.364 0.000 -0.484 -0.280

INDPRO.L13 0.3613 0.033 10.996 0.000 0.297 0.426

Roots

==============================================================================

Real Imaginary Modulus Frequency

------------------------------------------------------------------------------

AR.1 -1.0408 -0.2913j 1.0808 -0.4566

AR.2 -1.0408 +0.2913j 1.0808 0.4566

AR.3 -0.7803 -0.8039j 1.1204 -0.3726

AR.4 -0.7803 +0.8039j 1.1204 0.3726

AR.5 -0.2728 -1.0533j 1.0880 -0.2903

AR.6 -0.2728 +1.0533j 1.0880 0.2903

AR.7 0.2716 -1.0507j 1.0852 -0.2097

AR.8 0.2716 +1.0507j 1.0852 0.2097

AR.9 0.8010 -0.7285j 1.0827 -0.1175

AR.10 0.8010 +0.7285j 1.0827 0.1175

AR.11 1.0219 -0.2219j 1.0457 -0.0340

AR.12 1.0219 +0.2219j 1.0457 0.0340

AR.13 1.0560 -0.0000j 1.0560 -0.0000

------------------------------------------------------------------------------

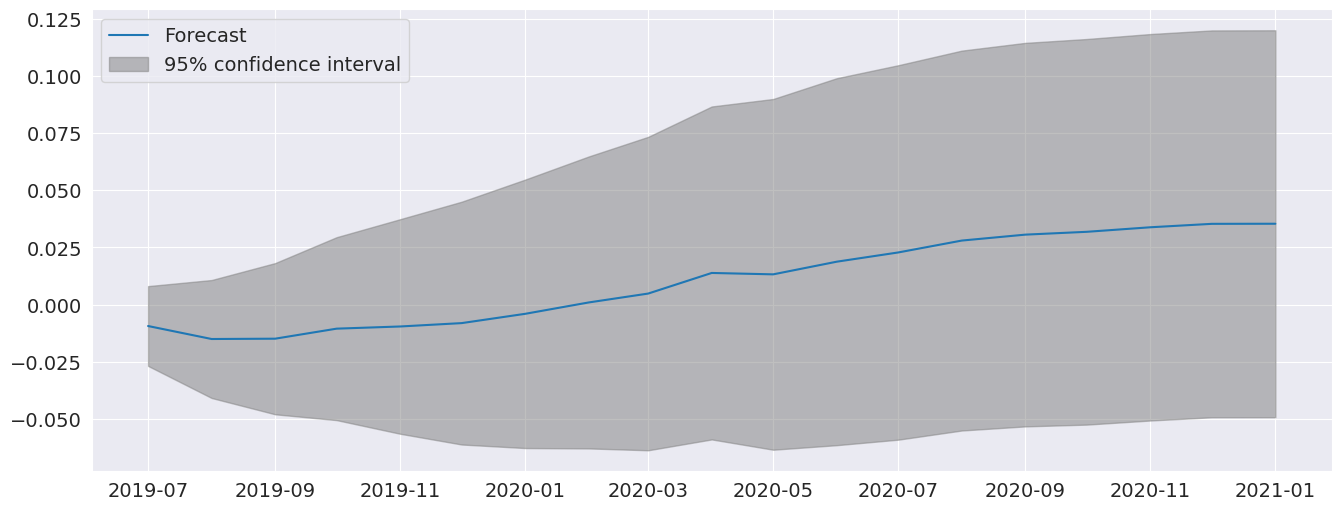

plot_predict 可用於產生預測圖以及信賴區間。在這裡,我們產生從最後一個觀察值開始並持續 18 個月的預測。

[20]:

ind_prod.shape

[20]:

(714,)

[21]:

fig = res_glob.plot_predict(start=714, end=732)

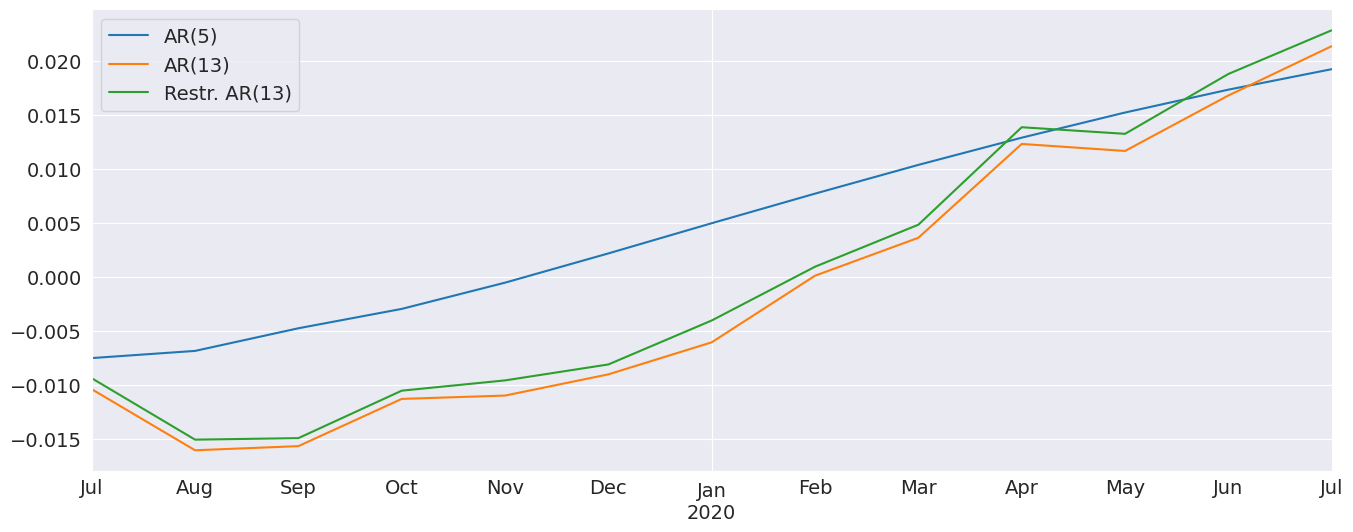

完整模型和限制模型的預測非常相似。我還包含了一個 AR(5),它具有非常不同的動態

[22]:

res_ar5 = AutoReg(ind_prod, 5, old_names=False).fit()

predictions = pd.DataFrame(

{

"AR(5)": res_ar5.predict(start=714, end=726),

"AR(13)": res.predict(start=714, end=726),

"Restr. AR(13)": res_glob.predict(start=714, end=726),

}

)

_, ax = plt.subplots()

ax = predictions.plot(ax=ax)

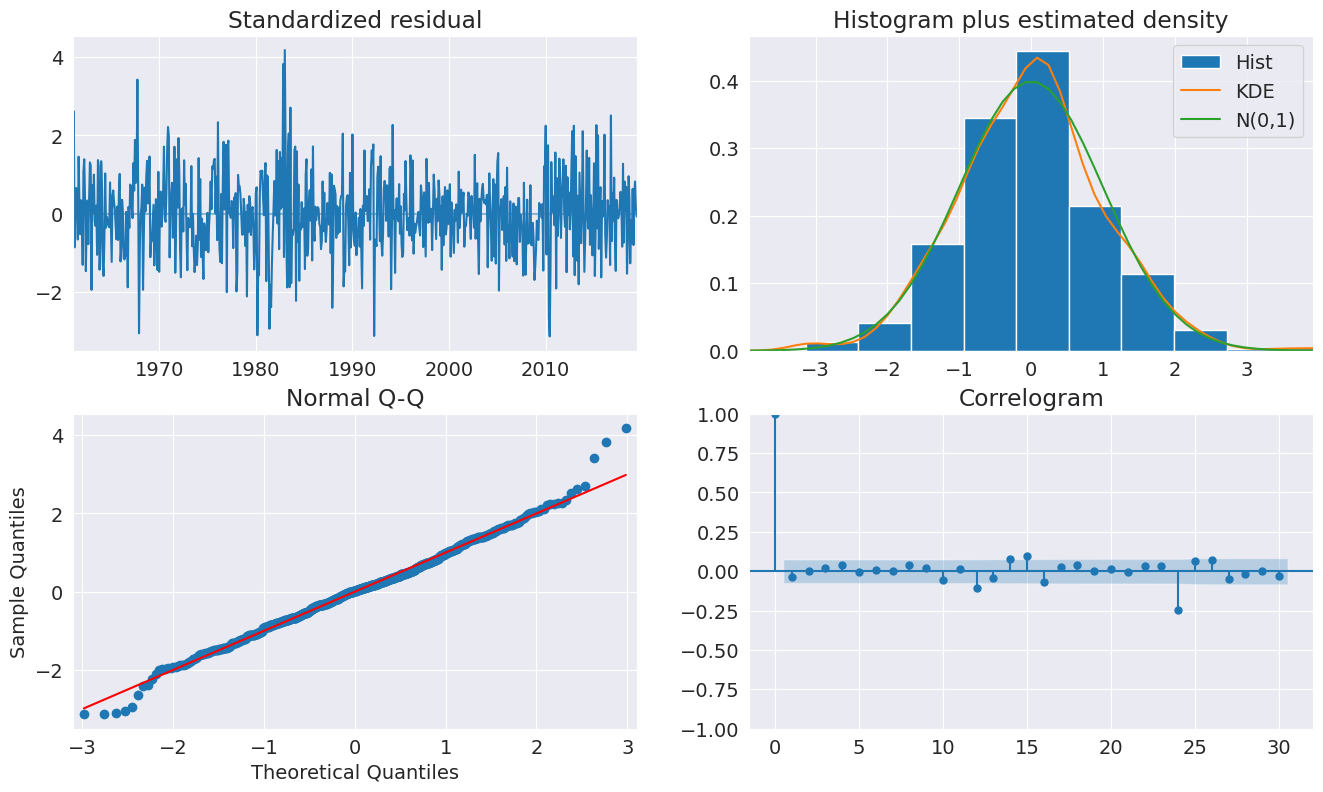

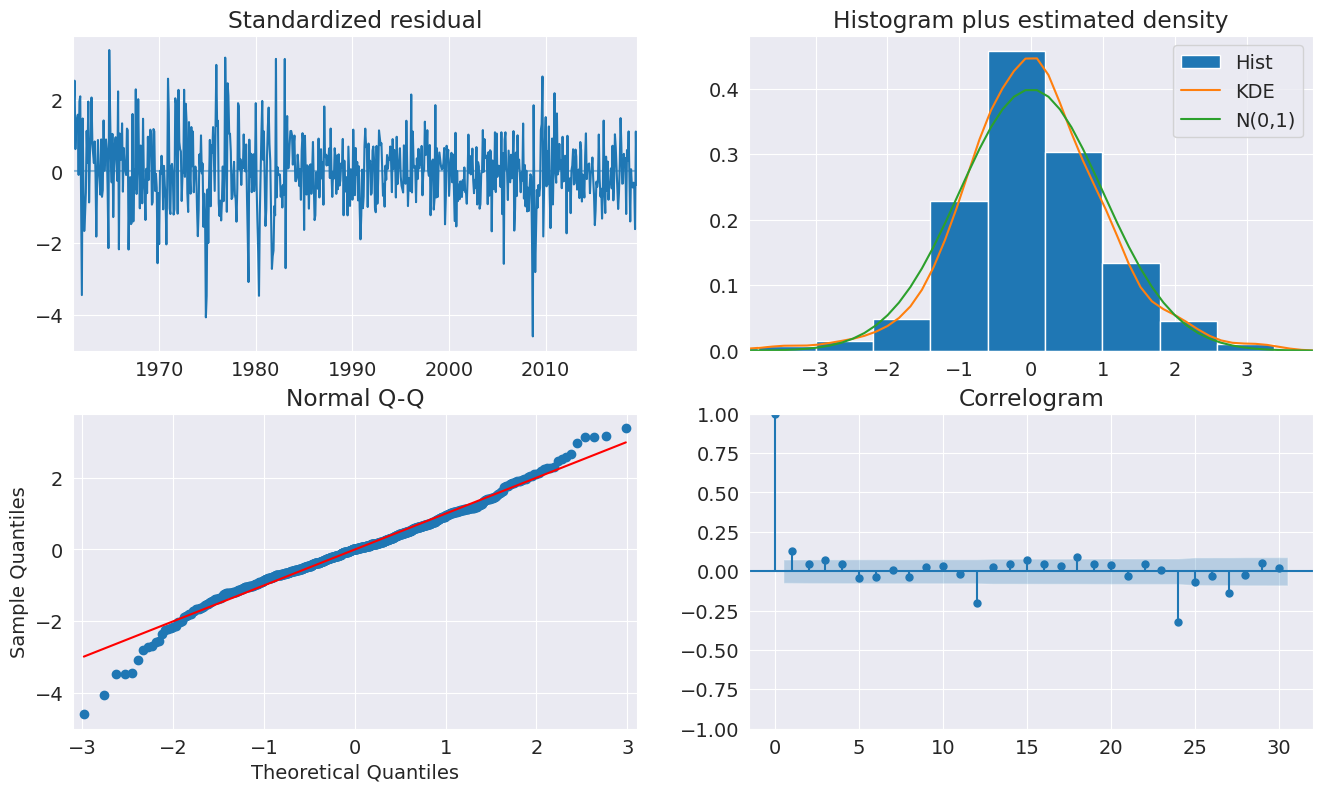

診斷表明該模型捕獲了數據中的大部分動態。ACF 顯示季節性頻率的模式,因此可能需要更完整的季節性模型 (SARIMAX)。

[23]:

fig = plt.figure(figsize=(16, 9))

fig = res_glob.plot_diagnostics(fig=fig, lags=30)

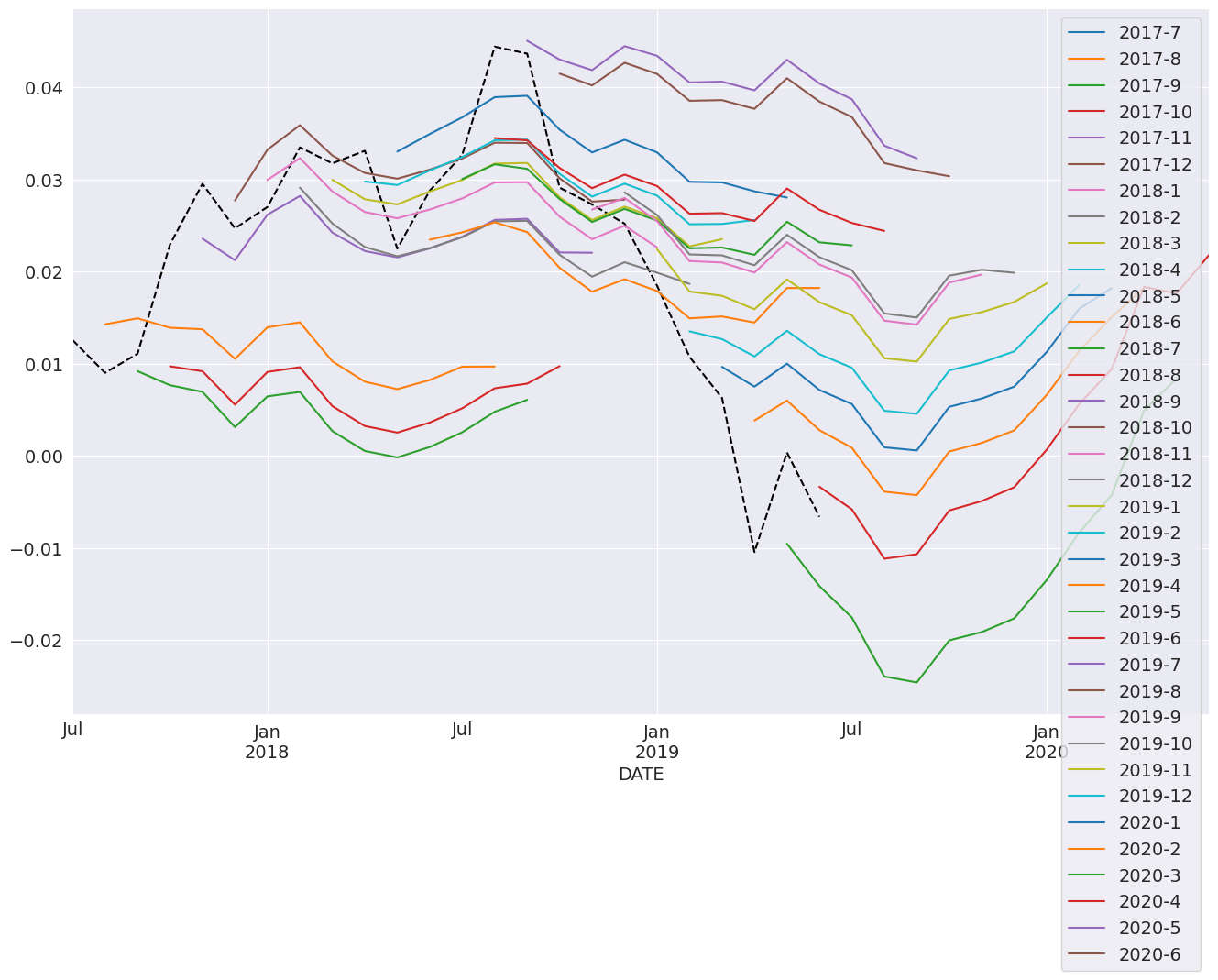

預測¶

預測是使用結果實例的 predict 方法產生的。預設產生靜態預測,這是一步預測。產生多步預測需要使用 dynamic=True。

在下一個單元格中,我們產生樣本中最後 24 個期間的 12 步向前預測。這需要一個迴圈。

注意:這些技術上是樣本內預測,因為我們預測的數據用於估計參數。產生 OOS 預測需要兩個模型。第一個必須排除 OOS 期間。第二個使用完整樣本模型的 predict 方法,其中包含來自排除 OOS 期間的較短樣本模型的參數。

[24]:

import numpy as np

start = ind_prod.index[-24]

forecast_index = pd.date_range(start, freq=ind_prod.index.freq, periods=36)

cols = ["-".join(str(val) for val in (idx.year, idx.month)) for idx in forecast_index]

forecasts = pd.DataFrame(index=forecast_index, columns=cols)

for i in range(1, 24):

fcast = res_glob.predict(

start=forecast_index[i], end=forecast_index[i + 12], dynamic=True

)

forecasts.loc[fcast.index, cols[i]] = fcast

_, ax = plt.subplots(figsize=(16, 10))

ind_prod.iloc[-24:].plot(ax=ax, color="black", linestyle="--")

ax = forecasts.plot(ax=ax)

與 SARIMAX 比較¶

SARIMAX 是具有外生迴歸量模型的季節性自迴歸積分移動平均的實作。它支援

指定季節性和非季節性 AR 和 MA 成分

包含外生變數

使用卡爾曼濾波器的完整最大概似估計

這個模型比 AutoReg 具有更豐富的功能。與 SARIMAX 不同,AutoReg 使用 OLS 估計參數。這樣速度更快,且問題是全局凸的,因此沒有局部最小值問題。當比較 AR(P) 模型時,封閉形式的估計器及其性能是 AutoReg 相較於 SARIMAX 的主要優勢。AutoReg 也支援季節性虛擬變數,如果使用者將它們作為外生迴歸變數包含在內,則可以與 SARIMAX 一起使用。

[25]:

from statsmodels.tsa.api import SARIMAX

sarimax_mod = SARIMAX(ind_prod, order=((1, 5, 12, 13), 0, 0), trend="c")

sarimax_res = sarimax_mod.fit()

print(sarimax_res.summary())

RUNNING THE L-BFGS-B CODE

* * *

Machine precision = 2.220D-16

N = 6 M = 10

At X0 0 variables are exactly at the bounds

At iterate 0 f= -3.21907D+00 |proj g|= 1.78480D+01

This problem is unconstrained.

At iterate 5 f= -3.22490D+00 |proj g|= 1.52242D-01

At iterate 10 f= -3.22526D+00 |proj g|= 1.46006D+00

At iterate 15 f= -3.22550D+00 |proj g|= 7.46711D-01

At iterate 20 f= -3.22610D+00 |proj g|= 2.52890D-01

At iterate 25 f= -3.22611D+00 |proj g|= 1.80088D-01

At iterate 30 f= -3.22646D+00 |proj g|= 1.46895D+00

At iterate 35 f= -3.22677D+00 |proj g|= 1.05336D-01

At iterate 40 f= -3.22678D+00 |proj g|= 3.20093D-02

* * *

Tit = total number of iterations

Tnf = total number of function evaluations

Tnint = total number of segments explored during Cauchy searches

Skip = number of BFGS updates skipped

Nact = number of active bounds at final generalized Cauchy point

Projg = norm of the final projected gradient

F = final function value

* * *

N Tit Tnf Tnint Skip Nact Projg F

6 41 64 1 0 0 3.201D-02 -3.227D+00

F = -3.2267786140514940

CONVERGENCE: REL_REDUCTION_OF_F_<=_FACTR*EPSMCH

SARIMAX Results

=========================================================================================

Dep. Variable: INDPRO No. Observations: 714

Model: SARIMAX([1, 5, 12, 13], 0, 0) Log Likelihood 2303.920

Date: Thu, 03 Oct 2024 AIC -4595.840

Time: 16:08:18 BIC -4568.415

Sample: 01-01-1960 HQIC -4585.248

- 06-01-2019

Covariance Type: opg

==============================================================================

coef std err z P>|z| [0.025 0.975]

------------------------------------------------------------------------------

intercept 0.0011 0.000 2.534 0.011 0.000 0.002

ar.L1 1.0801 0.010 107.331 0.000 1.060 1.100

ar.L5 -0.0847 0.011 -7.592 0.000 -0.107 -0.063

ar.L12 -0.4428 0.026 -17.302 0.000 -0.493 -0.393

ar.L13 0.4073 0.025 16.213 0.000 0.358 0.457

sigma2 9.136e-05 3.08e-06 29.630 0.000 8.53e-05 9.74e-05

===================================================================================

Ljung-Box (L1) (Q): 21.77 Jarque-Bera (JB): 959.65

Prob(Q): 0.00 Prob(JB): 0.00

Heteroskedasticity (H): 0.37 Skew: -0.63

Prob(H) (two-sided): 0.00 Kurtosis: 8.54

===================================================================================

Warnings:

[1] Covariance matrix calculated using the outer product of gradients (complex-step).

Warning: more than 10 function and gradient

evaluations in the last line search. Termination

may possibly be caused by a bad search direction.

[26]:

sarimax_params = sarimax_res.params.iloc[:-1].copy()

sarimax_params.index = res_glob.params.index

params = pd.concat([res_glob.params, sarimax_params], axis=1, sort=False)

params.columns = ["AutoReg", "SARIMAX"]

params

[26]:

| AutoReg | SARIMAX | |

|---|---|---|

| 常數項 | 0.001234 | 0.001085 |

| INDPRO.L1 | 1.088761 | 1.080116 |

| INDPRO.L5 | -0.105665 | -0.084738 |

| INDPRO.L12 | -0.388374 | -0.442793 |

| INDPRO.L13 | 0.362319 | 0.407338 |

自定義確定性過程¶

deterministic 參數允許使用自定義的 DeterministicProcess。這允許構建更複雜的確定性項,例如包含兩個週期的季節性分量,或者如下一個範例所示,使用傅立葉級數而不是季節性虛擬變數。

[27]:

from statsmodels.tsa.deterministic import DeterministicProcess

dp = DeterministicProcess(housing.index, constant=True, period=12, fourier=2)

mod = AutoReg(housing, 2, trend="n", seasonal=False, deterministic=dp)

res = mod.fit()

print(res.summary())

AutoReg Model Results

==============================================================================

Dep. Variable: HOUSTNSA No. Observations: 725

Model: AutoReg(2) Log Likelihood -2716.505

Method: Conditional MLE S.D. of innovations 10.364

Date: Thu, 03 Oct 2024 AIC 5449.010

Time: 16:08:18 BIC 5485.677

Sample: 04-01-1959 HQIC 5463.163

- 06-01-2019

===============================================================================

coef std err z P>|z| [0.025 0.975]

-------------------------------------------------------------------------------

const 1.7550 0.391 4.485 0.000 0.988 2.522

sin(1,12) 16.7443 0.860 19.478 0.000 15.059 18.429

cos(1,12) 4.9409 0.588 8.409 0.000 3.789 6.093

sin(2,12) 12.9364 0.619 20.889 0.000 11.723 14.150

cos(2,12) -0.4738 0.754 -0.628 0.530 -1.952 1.004

HOUSTNSA.L1 -0.3905 0.037 -10.664 0.000 -0.462 -0.319

HOUSTNSA.L2 -0.1746 0.037 -4.769 0.000 -0.246 -0.103

Roots

=============================================================================

Real Imaginary Modulus Frequency

-----------------------------------------------------------------------------

AR.1 -1.1182 -2.1159j 2.3932 -0.3274

AR.2 -1.1182 +2.1159j 2.3932 0.3274

-----------------------------------------------------------------------------

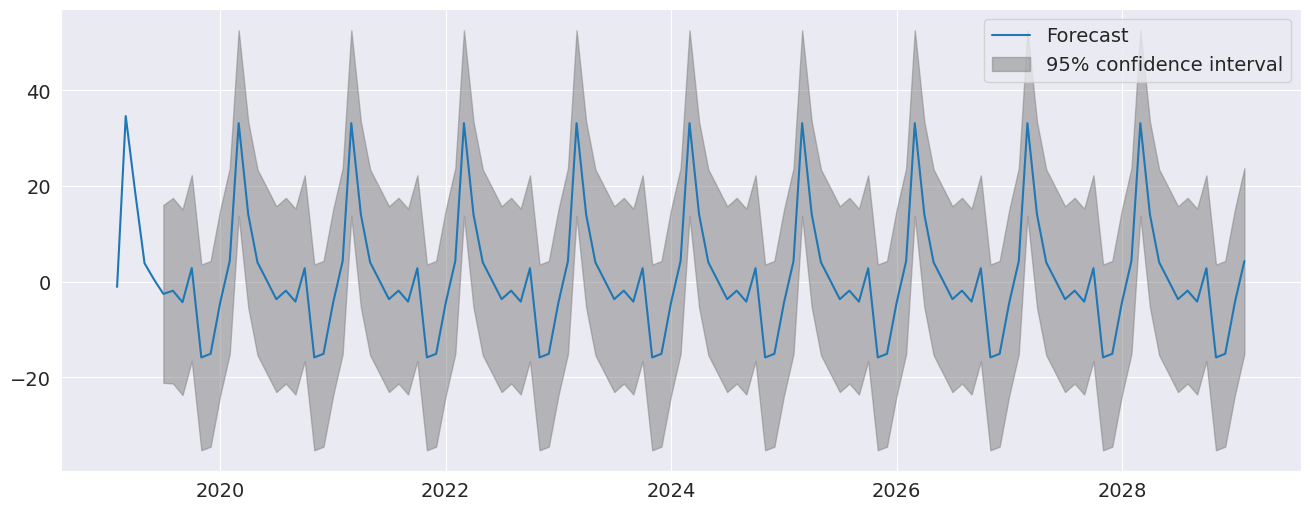

[28]:

fig = res.plot_predict(720, 840)